A short history of data tooling

As we begin to introduce Twirl to the world, it makes sense to place it in the context of how data tooling has evolved over the past decade or so. How did we get here? Why do we think Twirl should exist?

History

As the internet commercialized in the 2000s, many newly formed companies struggled to leverage the large amounts of data they were accumulating. Google’s 2004 MapReduce paradigm and the Hadoop ecosystem built around it made analyzing large data sets feasible, but required huge investments in infrastructure and personnel.

Then, in 2010, Apache Hive added a SQL-like interface to the MapReduce world, and over the next few years BigQuery, Redshift, and Snowflake were released. These popularized columnar cloud MPP (“massively parallel processing”) databases with full SQL support, making analysis accessible without expertise in distributed computing.

The arrival of approachable, fast compute over large datasets changed how companies approached analytics, and dramatically increased the ROI on analytics efforts. Just a few years after columnar cloud warehouses were established, new tools arrived that pioneered ways of working that took advantage of these systems to create better user experiences: Looker, with its data modeling language and runtime datasets, and dbt, with its precomputation-oriented dataset curation.

Looker bet that the cost of compute would continue to fall (in both time and dollars), and that constructing and running complex SQL queries from user definitions would therefore become increasingly viable, while optimizing queries by hand would matter less.

At the other end, dbt was born out of the observation that while MPP warehouses had incredible processing power, and SQL is surprisingly expressive, it would often take several steps of transformation to get data from its raw state to a place where it provided insight. Doing all of these steps in a single query meant repeating SQL that could have been reused, and it also meant queries began to hit resource limits in the warehouses themselves. By computing each step one at a time and persisting the intermediate results, dbt provided a principled approach to constructing clean, valuable datasets out of noisy and disparate inputs.
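To make the idea of persisting intermediate results concrete, here is a minimal sketch in Python. It is not how dbt is implemented: it uses the standard library's in-memory SQLite as a stand-in for a warehouse, and the table names (raw_orders, stg_orders, customer_revenue) and the build helper are hypothetical, chosen only for illustration.

```python
import sqlite3

# In-memory SQLite stands in for a cloud warehouse (an assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount_cents INTEGER, status TEXT);
    INSERT INTO raw_orders VALUES
        (1, 'ada', 1200, 'complete'),
        (2, 'ada',  800, 'cancelled'),
        (3, 'bob',  500, 'complete'),
        (4, 'bob', 1500, 'complete');
""")

# Each step is a (table name, SELECT) pair. Later steps read the tables
# persisted by earlier ones instead of repeating their SQL inline.
steps = [
    ("stg_orders",
     "SELECT order_id, customer, amount_cents / 100.0 AS amount "
     "FROM raw_orders WHERE status = 'complete'"),
    ("customer_revenue",
     "SELECT customer, SUM(amount) AS revenue FROM stg_orders GROUP BY customer"),
]

def build(conn, steps):
    """Materialize each step as a table, in the order given."""
    for name, select_sql in steps:
        conn.execute(f"DROP TABLE IF EXISTS {name}")
        conn.execute(f"CREATE TABLE {name} AS {select_sql}")

build(conn, steps)
revenue = dict(conn.execute(
    "SELECT customer, revenue FROM customer_revenue ORDER BY customer"))
staged_rows = conn.execute("SELECT COUNT(*) FROM stg_orders").fetchone()[0]
```

Because stg_orders is stored as its own table, any other downstream query can reuse the cleaned orders without copying the filtering logic.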

dbt’s separation of long SQL queries into logical pieces also represented a step forward in organizing data analysis across a company. Since each individual piece of SQL could be understood and reused, teams of analysts working together could construct much more useful high-level abstractions from raw data over time.

In parallel, forward-thinking data companies like Spotify and Airbnb were wrestling with similar issues of coordination and organization, but without the unifying thesis of SQL in an MPP warehouse. They used Hadoop-oriented technologies, but still needed a way of sequencing steps within their data flow to allow the reuse of derived datasets and make efficient use of limited resources. Their solutions, Luigi and Airflow, reflected the remarkable usefulness of DAGs and dataflow programming, as well as the use of Python as the imperative lingua franca of data work.
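The core of the DAG idea can be sketched in a few lines of Python. This is an illustration only, not Luigi's or Airflow's API: the dataset names and the run_order helper are hypothetical, and the ordering comes from the standard library's graphlib module (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Map each dataset to the datasets it is derived from (names are hypothetical).
deps = {
    "raw_events": set(),
    "raw_users": set(),
    "sessions": {"raw_events"},
    "active_users": {"sessions", "raw_users"},
    "weekly_report": {"active_users"},
}

def run_order(deps):
    """Return an order in which every dataset comes after its inputs."""
    return list(TopologicalSorter(deps).static_order())

order = run_order(deps)
for name in order:
    # A real orchestrator would launch a job here; this sketch only prints.
    print(f"building {name}")
```

Declaring only what each step depends on, and letting the tool work out the sequence, is what lets derived datasets be built once and reused by several downstream steps.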

As the cloud warehouses matured and it became apparent that SQL was sticking around (in the early 2010s MongoDB and NoSQL seemed ascendant), many companies had settled on a similar-looking set of technologies by 2018, dubbed the Modern Data Stack (MDS): Fivetran, Airflow, dbt, Snowflake, Looker.

The state of things

This solution worked well for those who could make the investment. Snowflake, Looker, and Fivetran are all expensive, and both dbt and Looker require extensive upfront effort to model data before seeing any payoff. However, companies that successfully implemented these technologies found that they could quickly answer questions about their business and build reliable data-driven workflows and automation.

Containers and serverless infrastructure became mainstream, superseding the Celery-based execution engine at the heart of Airflow. Airflow launched operators to take advantage of the newer serverless options, but they always felt bolted on, and 2019 brought both Dagster and Prefect as open-core tools attempting to replace it: Dagster with its “Software-Defined Assets” concept taking ideas from dbt, and Prefect with its focus on monitoring and reliability.

Of the tools in the MDS, dbt has perhaps been the most successful, staving off real competition until very recently. However, mature data teams almost universally discover use cases that require more than just SQL in a warehouse: machine learning, working with APIs, or generating a visualization and posting it to Slack all require switching from dbt to an orchestrator and losing some of the benefit of representing work as a DAG.

Airflow, dbt, Prefect, and Dagster all launched hosted cloud options, but running dbt inside an orchestrator is messy, since these systems either don’t communicate or do so imperfectly. Relying on dbt Cloud alone constrains you to running table transformations in the data warehouse, and gives you no tools at all for handling files, running arbitrary containers, or scheduling dependent jobs at differing cadences.

Increasingly, the bottom of the MDS is also expanding upwards, with Snowflake and BigQuery releasing many features designed to make the warehouse a more general solution for machine learning and other workloads that are a poor fit for SQL. A drawback is that many companies find these tools (and Snowflake in particular) to be unpredictably expensive, so moving more workloads there without an easy off-ramp may leave a company stuck in an untenable cost situation.

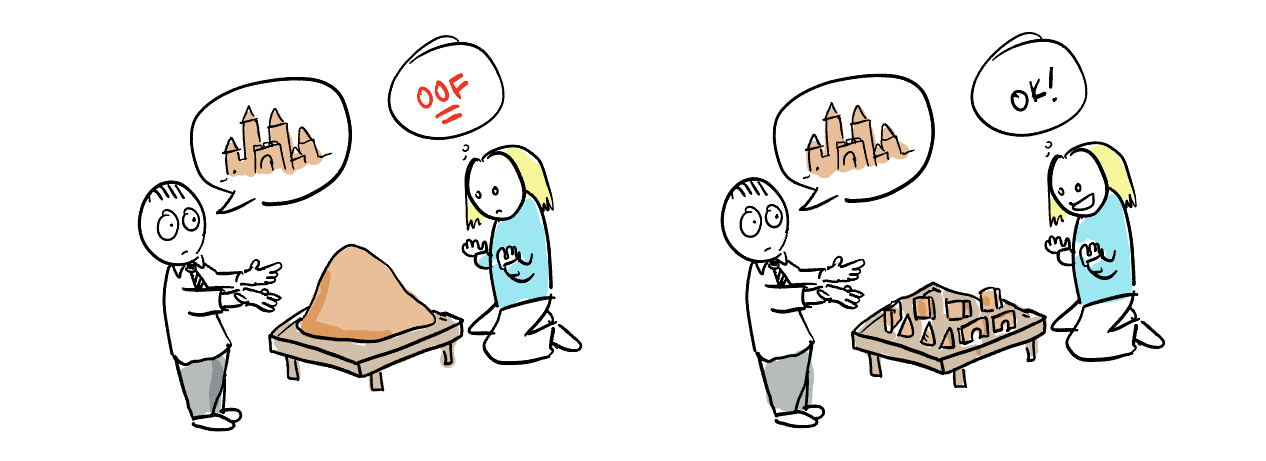

Our view

We’ve observed that while these tools provide great building blocks, in practice companies have to make expensive hires and spend months (or sometimes years 😳) stitching them together to arrive at a platform they can build value on top of. We’ve done this several times at companies big and small, and each time we found that the same solution would work well, despite differences in company size, industry, or existing technology.

Our ambition with Twirl is to build this solution one more time, in a way that generalizes across companies and combines ease of getting started with the maturity of a platform you won’t ever grow out of.

By designing the orchestrator and transformation layer to work together from the outset, we can avoid the time and cost of stitching solutions together, and along the way we can provide the little affordances we know from experience most teams would otherwise end up building themselves at some point.

The ideal solution

Against this background, we think the ideal data tool for most teams today should be:

Instant startup: We don’t think teams should spend even a day on cloud infrastructure and setting up their platform. On that first day you should already be deploying your first running models. We think some permissions should be provisioned and the rest should just work™️.

Language agnostic: We think teams should be able to do their simple modeling in SQL, but easily switch to Python + Pandas when the situation calls for it, without sacrificing the local dev experience, data lineage, or anything else. We go beyond this, with arbitrary containers so you can do data transformation in any language.

Cloud agnostic: With Snowflake and BigQuery increasingly competing through deeply integrated features, it becomes harder and harder to switch from one to the other. We aspire to provide abstractions that allow toggling between warehouse solutions, reducing the risk of lock-in.

Of course there are other features we believe are important when you’re working with data:

Secure by default: Data is safest at home, and should never leave the VPC or cloud account of its owner just to power some data pipeline.

Great local dev experience: Iterating on data jobs locally with real production data is a must, as is version control and CI/CD.

Support for files: Many data teams are productive using only SQL in a warehouse, but most eventually find they need to work with files as well, particularly for data sharing and/or machine learning.

Modality agnostic: We’ve seen that low latency eventually becomes a requirement for many companies, so we want our abstractions to work equally well for batch or streaming transformations.

Unconstrained: While we know that a few Python and SQL scripts will usually get us where we need to go at first, we also know that eventually we’ll need more control. We think a framework should make the usual things easy, but never limit you when your situation gets weird (sure, run that F# container with 120GiB of RAM!).

Plain code: We hate not being able to copy and paste production code into REPLs, IDEs, notebooks, etc. The ideal solution has no Python decorators, no Jinja template bindings, nothing but the raw code that needs to run.

And so many more: built-in cost control features, cron-free scheduling, and data contracts across any language, to name a few. Eventually we see a platform where all data work can be done in one place, and anyone who can write code can put data products into production with confidence.

So that’s what we’re building! Current customers use Twirl for everything from analytics projects with hundreds of tables to powering user-facing apps with data. We’re currently onboarding new companies, so please reach out if you think Twirl would be a good fit for your needs.